AI-Driven Autonomy and the New Tech Landscape: From Xpeng to In-House Chips and Beyond

Author: Alex Chen

The last few years have accelerated a broad shift toward artificial intelligence as an engine of progress across multiple technology frontiers. From autonomous vehicles and smart construction sites to in-house chip design and biotech research, the common threads are AI-driven perception and modeling, shared data ecosystems, and increasingly capable processing hardware that enables more ambitious software. Several recent articles illuminate this trend from different angles: Chinese automakers pursuing autonomy with camera-based stacks, in-house chip strategies for consumer devices, smart construction that keeps complex projects on schedule, and exploratory biotech work that tests AI-driven approaches in the life sciences. Taken together, these pieces sketch a landscape in which AI is not a single upgrade but a platform for rethinking how we design, build, and regulate complex systems. Yet the rapid expansion raises questions about safety, ethics, labor markets, and governance as industries push AI from lab experiments into everyday use.

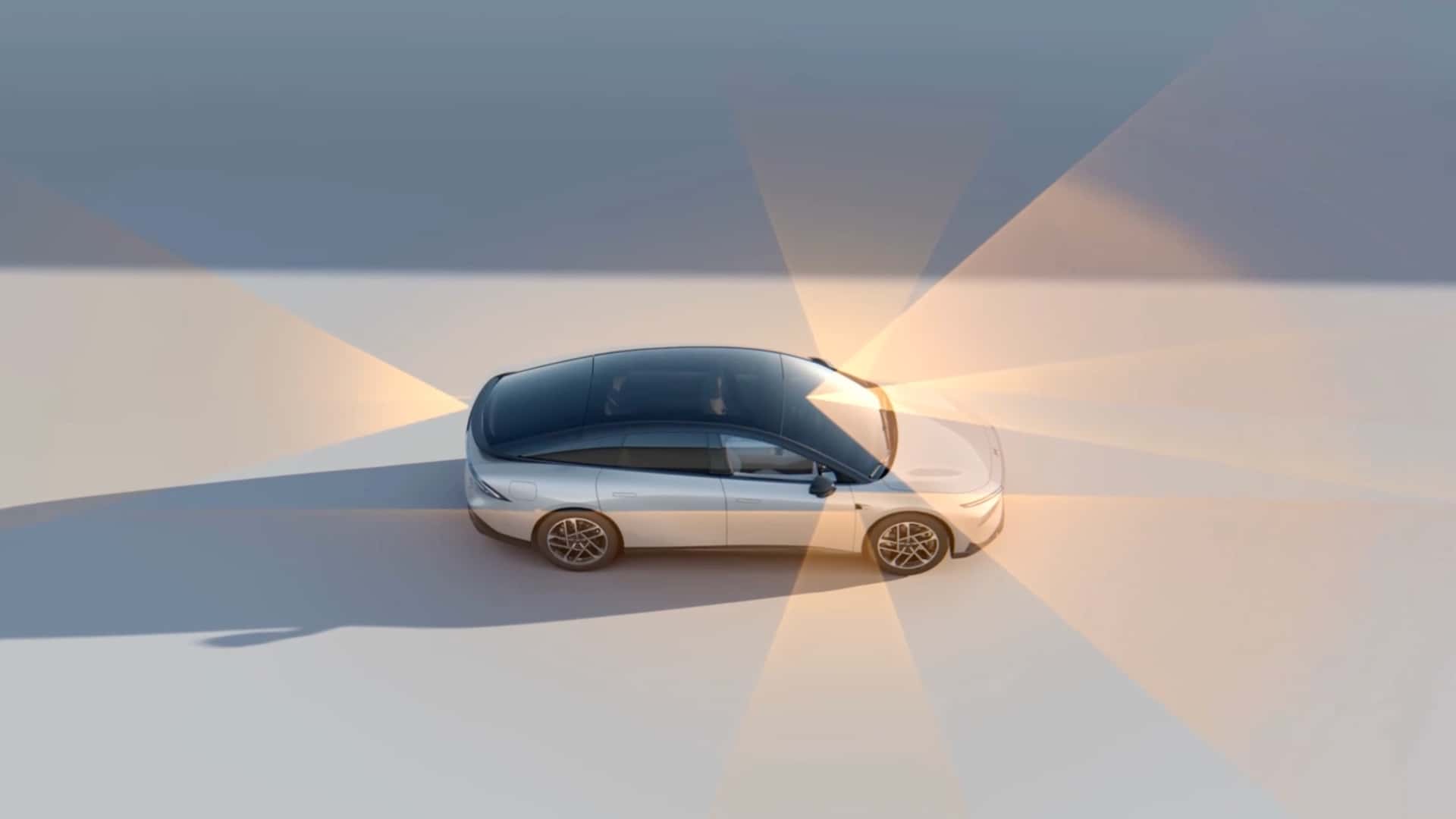

In the automotive sector, the debate about autonomy has become particularly salient. A prominent thread is the move by several automakers, most notably in China, toward camera-based perception paired with AI-driven decision-making rather than heavier, sensor-dominated stacks. While Tesla’s vision-only approach has drawn controversy in many markets, much of the industry is embracing similar strategies that prioritize high-quality vision, robust AI models, and real-time data integration. InsideEVs and other outlets highlight how Xpeng and its peers are pursuing higher levels of autonomy using cameras, onboard edge processing, and over-the-air updates informed by cloud data, a mix intended to reduce costs and accelerate deployment. The practical implication is that as more firms lean into camera-centric stacks, regulators are likely to demand new safety assurances, independent testing, and transparent accountability for how autonomous systems behave. The broader point is not that one approach is universally right, but that many companies now treat autonomy as a software problem at its core, with hardware serving as a flexible, upgradeable platform.

Xpeng's XNGP autonomous-driving work as covered by InsideEVs, highlighting a camera-based stack and AI-driven perception.

Meanwhile, the tech industry’s broader trajectory shows that autonomy is inseparable from hardware strategy. Industry observers increasingly discuss how in-house chip initiatives, whether for smartphones or AI accelerators, shape the capability and cost of AI features. The Apple and Google narratives are instructive. Apple’s long-standing strategy of designing its own chips has given the company tighter control over performance, power efficiency, and AI capabilities across its devices, and AppleInsider’s reporting on Apple’s in-house silicon underscores how new architectures can speed up AI tasks while enabling a more cohesive software ecosystem. At the same time, the device market’s AI arms race, exemplified by the AI features in Google’s Pixel line, shows that chips are not just accelerators but critical levers for competitive differentiation. The takeaway is that as hardware and software co-evolve, AI-enabled autonomy will increasingly depend on specialized computing layers that can be tuned for safety, privacy, and efficiency, with companies balancing control, openness, and regulatory compliance.

From the construction industry to smart cities, AI-driven processes are reshaping how projects are planned, monitored, and delivered. A striking case comes from Tengah, Singapore, where builders reportedly used 24/7 shift rotations and AI-based monitoring to keep a complex residential development on track. The Straits Times coverage describes how investing in digital planning, automated scheduling, and constant supervision helped minimize delays and deliver amenities to residents on schedule. The case illustrates how AI can translate urban development ambitions into tangible outcomes: shorter lead times, fewer overruns, and better alignment with resident expectations. Yet it also raises questions about labor displacement, safety oversight, and on-site data governance. As with autonomous vehicles and consumer devices, the practical promise of AI in construction hinges on robust validation, transparent processes, and careful integration with human teams on the ground.

A Straits Times report highlights how AI-enabled monitoring helped Tengah’s construction stay on schedule while delivering amenities to buyers.

Beyond engineering halls and construction sites lies the frontier of the life sciences, where AI-assisted design is being explored. A Biztoc report summarizes a provocative and potentially controversial line of inquiry: scientists used AI models to design bacteriophages, viruses that infect specific bacteria. According to the report, the study, which has not yet been peer-reviewed, describes how AI models analyze and generate genetic sequences for phages that hunt harmful microbes. The potential payoff is significant: faster discovery of treatments and more precise therapeutics. But the work also triggers ethical debates about safety, dual-use applications, and governance over AI’s capability to design or alter biological agents. The piece serves as a reminder that AI’s reach extends into fields with profound consequences, demanding careful oversight, rigorous peer review, and clear risk-management frameworks as the science progresses.

AI-designed bacteriophages: a promising frontier in biotech, but one that requires careful assessment of risk and governance.

The societal implications of AI-driven change go beyond engineering and biotech. A Fortune article on Gen Z and the job market notes a striking statistic: the share of Americans with at least a bachelor’s degree has climbed to about 37.5 percent, up from roughly 25.6 percent in 2000. The broader debate about AI’s impact on entry-level opportunities is ongoing, with some arguing that automation will compress early-career prospects and others that it will merely recalibrate them. The education figures provide context: as more workers hold degrees, competition for traditional “first job” roles grows stiffer, pushing policymakers and employers to rethink training, apprenticeship models, and the pathways that help young workers turn credentials into livelihoods. The AI trajectory therefore suggests a dual reality: more capable tools and new job roles on one hand, and on the other, a need for targeted training aligned with an automation-enabled economy.

Fortune’s coverage highlights how higher education attainment intersects with evolving job opportunities in an AI-enabled economy.

As the day-to-day operations of large-scale projects and public life become more instrumented, regional and cultural contexts shape how AI and related technologies are adopted. A recent piece on Navaratri festivities in India describes how cutting-edge technology is being used to streamline logistics, information dissemination, and the visitor experience at Indrakeeladri. The Dasara 2025 app is cited as a central tool, providing real-time information on routes, services, and crowd management, which underscores how technology-enabled apps can improve safety, reduce friction for pilgrims, and support large-scale cultural events. Deployments like this, which pair systems-level technology with human organization on the ground, point toward a future in which technology augments human capability in diverse and sometimes unexpected ways.

Cutting-edge technology supports Navaratri 2025 at Indrakeeladri, reflecting how technology-assisted planning can improve large-scale cultural events.

The frontier of innovation is not limited to AI-powered autonomy or corporate chips; it also thrives in grassroots invention. A 15-year-old innovator’s “ice scalpel,” conceived to improve trauma medicine, shows how young minds apply scientific curiosity and engineering to urgent medical needs. While ideas like the ice scalpel may not immediately reshape trauma care, they reflect a broader pattern: rapid iteration, access to data and tools, and a willingness to explore radical approaches can accelerate breakthroughs when backed by research, funding, and mentorship. Collectively, these diverse threads suggest a future in which AI and related technologies create new opportunities but also demand careful stewardship, from ethical governance and responsible innovation to workforce retraining and inclusive policy design.

Ultimately, the emerging AI-enabled landscape will require a convergence of disciplines, governance, and practical know-how. The topics these articles touch on (autonomy in vehicles, in-house hardware platforms that power AI, smart construction and city infrastructure, biotech exploration, evolving labor markets, and community-facing deployments) point to a future in which technology acts as an amplifier for human potential. The challenges ahead are substantial: ensuring safety and accountability in autonomous systems, balancing innovation with robust ethical oversight, rethinking education and training for an increasingly automated economy, and building governance frameworks that address both opportunity and risk. Yet the common thread across these examples is resilience through thoughtful design, transparent collaboration between industry and society, and a belief that technology, when steered responsibly, can deliver tangible benefits across mobility, housing, healthcare, culture, and work.